【问题实操】银河麒麟高级服务器操作系统实例,CPU软锁报错触发宕机

1.服务器环境以及配置

处理器:

Kunpeng 920

内存:

256G DDR4

整机类型/架构:

TaiShan 200 (Model 2280)

内核版本

4.19.90-23.8.v2101.ky10.aarch64

2.问题现象描述

两台搭载麒麟v10 sp1的机器均在系统CPU软锁报错时,触发了softlockup_panic宕机。

3.问题分析

3.1.分析kylin1日志 3.1.1. 分析vmcore-dmesg日志

Dec 4 23:06:46 localhost sh[2145769]: [docker][INFO] do health check success [200]

Dec 4 23:06:47 localhost kernel: [2805817.816879] watchdog: BUG: soft lockup - CPU#17 stuck for 22s! [migration/17:96]

Dec 4 23:06:47 localhost kernel: [2805817.824909] CPU: 17 PID: 96 Comm: migration/17 Kdump: loaded Tainted: G W L 4.19.90-23.8.v2101.ky10.aarch64 #1

Dec 4 23:06:47 localhost kernel: [2805817.824910] Hardware name: Huawei TaiShan 200 (Model 2280)/BC82AMDGK, BIOS 1.77 06/28/2021

Dec 4 23:06:47 localhost kernel: [2805817.824911] pstate: 60c00009 (nZCv daif +PAN +UAO)

Dec 4 23:06:47 localhost kernel: [2805817.824916] pc : multi_cpu_stop+0xe0/0x170

Dec 4 23:06:47 localhost kernel: [2805817.824917] lr : 0x2

Dec 4 23:06:47 localhost kernel: [2805817.824918] sp : ffff803fc73f3d70

Dec 4 23:06:47 localhost kernel: [2805817.824933] Call trace:

Dec 4 23:06:47 localhost kernel: [2805817.824935] multi_cpu_stop+0xe0/0x170

Dec 4 23:06:47 localhost kernel: [2805817.824936] cpu_stopper_thread+0xd4/0x160

Dec 4 23:06:47 localhost kernel: [2805817.824938] smpboot_thread_fn+0x184/0x1b8

Dec 4 23:06:47 localhost kernel: [2805817.824941] kthread+0x134/0x138

Dec 4 23:06:47 localhost kernel: [2805817.824943] ret_from_fork+0x10/0x18

Dec 4 23:06:51 localhost kernel: [2805821.817361] watchdog: BUG: soft lockup - CPU#34 stuck for 23s! [runc:[2:INIT]:826254]

Dec 4 23:06:51 localhost kernel: [2805821.825827] CPU: 34 PID: 826254 Comm: runc:[2:INIT] Kdump: loaded Tainted: G W L 4.19.90-23.8.v2101.ky10.aarch64 #1

Dec 4 23:06:51 localhost kernel: [2805821.825828] Hardware name: Huawei TaiShan 200 (Model 2280)/BC82AMDGK, BIOS 1.77 06/28/2021

Dec 4 23:06:51 localhost kernel: [2805821.825840] pc : get_dominating_id+0x4c/0xb8

Dec 4 23:06:51 localhost kernel: [2805821.825847] lr : show_mountinfo+0x10c/0x2d8

Dec 4 23:06:51 localhost kernel: [2805821.825848] sp : ffffa03ca09bfc60

Dec 4 23:06:51 localhost kernel: [2805821.825848] x29: ffffa03ca09bfc60 x28: 00000040009652da

Dec 4 23:06:51 localhost kernel: [2805821.825850] x27: 0000000000080b26 x26: 0000000000000001

Dec 4 23:06:51 localhost kernel: [2805821.825851] x25: ffff000009114000 x24: ffffa0218a4cc808

Dec 4 23:06:51 localhost kernel: [2805821.825852] x23: ffff803783107500 x22: ffffa0218a4cc808

Dec 4 23:06:51 localhost kernel: [2805821.825853] x21: ffffa02c64372680 x20: ffff803206622400

Dec 4 23:06:51 localhost kernel: [2805821.825854] x19: ffffa03e4a6f1080 x18: 0000000000000000

Dec 4 23:06:51 localhost kernel: [2805821.825855] x17: 0000000000000000 x16: 0000000000000000

Dec 4 23:06:51 localhost kernel: [2805821.825856] x15: ffffffffffffffff x14: ffffa03f26920000

Dec 4 23:06:51 localhost kernel: [2805821.825857] x13: ffffa03f26917b88 x12: 0000000000000008

Dec 4 23:06:51 localhost kernel: [2805821.825858] x11: 0101010101010101 x10: 7f7f7f7f7f7f7f7f

Dec 4 23:06:51 localhost kernel: [2805821.825859] x9 : fefefefefefeff64 x8 : 7f7f7f7f7f7f7f7f

Dec 4 23:06:51 localhost kernel: [2805821.825860] x7 : 6c6873606b64712b x6 : ffffa03f26917c08

Dec 4 23:06:51 localhost kernel: [2805821.825861] x5 : 0000000000000000 x4 : ffffffffffffffff

Dec 4 23:06:51 localhost kernel: [2805821.825862] x3 : 0000000000000065 x2 : 0000000000000009

Dec 4 23:06:51 localhost kernel: [2805821.825863] x1 : ffffa0218a4cc808 x0 : ffff803f7ace8180

Dec 4 23:06:51 localhost kernel: [2805821.825865] Call trace:

Dec 4 23:06:51 localhost kernel: [2805821.825867] get_dominating_id+0x4c/0xb8

Dec 4 23:06:51 localhost kernel: [2805821.825868] show_mountinfo+0x10c/0x2d8

Dec 4 23:06:51 localhost kernel: [2805821.825872] m_show+0x30/0x40

Dec 4 23:06:51 localhost kernel: [2805821.825875] seq_read+0x2b4/0x4a8

Dec 4 23:06:51 localhost kernel: [2805821.825881] __vfs_read+0x48/0x80

Dec 4 23:06:51 localhost kernel: [2805821.825882] vfs_read+0x94/0x150

Dec 4 23:06:51 localhost kernel: [2805821.825884] ksys_read+0x5c/0xc8

Dec 4 23:06:51 localhost kernel: [2805821.825885] __arm64_sys_read+0x24/0x30

Dec 4 23:06:51 localhost kernel: [2805821.825890] el0_svc_common+0x78/0x130

Dec 4 23:06:51 localhost kernel: [2805821.825891] el0_svc_handler+0x38/0x78

Dec 4 23:06:51 localhost kernel: [2805821.825894] el0_svc+0x8/0x1b0

Dec 4 23:06:53 localhost systemd[1]: titanagent_check_exception.service: Succeeded.

Dec 4 23:06:53 localhost systemd[1]: Started titanagent check exception.runc出现大量的软锁,软锁日志如下 pc : get_dominating_id+0x58/0xb8, lr : show_mountinfo+0x10c/0x2d8,从lr寄存器看,是在查看挂载信息。

[2935812.854382] watchdog: BUG: soft lockup - CPU#55 stuck for 22s! [runc:[2:INIT]:926483]

[2936044.851805] watchdog: BUG: soft lockup - CPU#32 stuck for 22s! [runc:[2:INIT]:783242]

[2936096.851768] watchdog: BUG: soft lockup - CPU#41 stuck for 23s! [runc:[2:INIT]:858586]

[2936096.852292] watchdog: BUG: soft lockup - CPU#55 stuck for 23s! [runc:[2:INIT]:926483]

[2936096.852443] watchdog: BUG: soft lockup - CPU#59 stuck for 23s! [runc:[2:INIT]:890626]

[2935608.854965] watchdog: BUG: soft lockup - CPU#30 stuck for 22s! [runc:[2:INIT]:895896]

[2935608.863423] CPU: 30 PID: 895896 Comm: runc:[2:INIT] Kdump: loaded Tainted: G W L 4.19.90-23.8.v2101.ky10.aarch64 #1

[2935608.863424] Hardware name: Huawei TaiShan 200 (Model 2280)/BC82AMDGK, BIOS 1.77 06/28/2021

[2935608.863426] pstate: 20400009 (nzCv daif +PAN -UAO)

[2935608.863431] pc : get_dominating_id+0x58/0xb8

[2935608.863434] lr : show_mountinfo+0x10c/0x2d8

[2935608.863434] sp : ffffa03f34f1fc60

[2935608.863436] x29: ffffa03f34f1fc60 x28: 0000004000f4b26a

[2935608.863437] x27: 0000000000260b96 x26: 0000000000000001

[2935608.863438] x25: ffff000009114000 x24: ffff803782bf3d08

[2935608.863439] x23: ffffa021a417a680 x22: ffff803782bf3d08

[2935608.863440] x21: ffffa02c6579bf80 x20: ffff802363223a80

[2935608.863441] x19: ffffa0218fc72880 x18: 0000000000000000

[2935608.863442] x17: 0000000000000000 x16: 0000000000000000

[2935608.863443] x15: ffffffffffffffff x14: ffffa03f73540000

[2935608.863444] x13: ffffa03f7353d768 x12: 0000000000000008

[2935608.863444] x11: 0101010101010101 x10: 7f7f7f7f7f7f7f7f

[2935608.863445] x9 : fefefefefefeff64 x8 : 7f7f7f7f7f7f7f7f

[2935608.863446] x7 : 6c6873606b64712b x6 : ffffa03f7353d7e8

[2935608.863447] x5 : 0000000000000000 x4 : ffffffffffffffff

[2935608.863448] x3 : 0000000000000065 x2 : 0000000000000009

[2935608.863449] x1 : ffff803782bf3d08 x0 : ffff803786efd480

[2935608.863451] Call trace:

[2935608.863453] get_dominating_id+0x58/0xb8

[2935608.863454] show_mountinfo+0x10c/0x2d8

[2935608.863456] m_show+0x30/0x40

[2935608.863458] seq_read+0x2b4/0x4a8

[2935608.863461] __vfs_read+0x48/0x80

[2935608.863462] vfs_read+0x94/0x150

[2935608.863463] ksys_read+0x5c/0xc8

[2935608.863464] __arm64_sys_read+0x24/0x30

[2935608.863466] el0_svc_common+0x78/0x130

[2935608.863468] el0_svc_handler+0x38/0x78

[2935608.863469] el0_svc+0x8/0x1b0整理堆栈的函数调用,如下所示:

__arm64_sys_read+0x24/0x30

-> ksys_read+0x5c/0xc8

-> vfs_read+0x94/0x150

__vfs_read+0x48/0x80 //虚拟文件系统层(VFS)的读取操作函数

seq_read+0x2b4/0x4a8 //通常用于从顺序文件中读取数据,可能是与/proc文件系统相关的操作

m_show+0x30/0x40 //可能是一个用于将信息显示到某个输出(如控制台或日志)的函数

show_mountinfo+0x10c/0x2d8 //显然是用来展示挂载信息的,/proc/[pid]/mountinfo文件包含了进程的挂载点信息。这表明软锁可能发生在处理文件系统挂载相关的操作时。

get_dominating_id+0x54/0xb8 //可能与获取某种类型的ID(可能是进程ID、线程ID或资源ID)有关

查看当时的内存使用情况,可见当时的空闲内存还有很多。

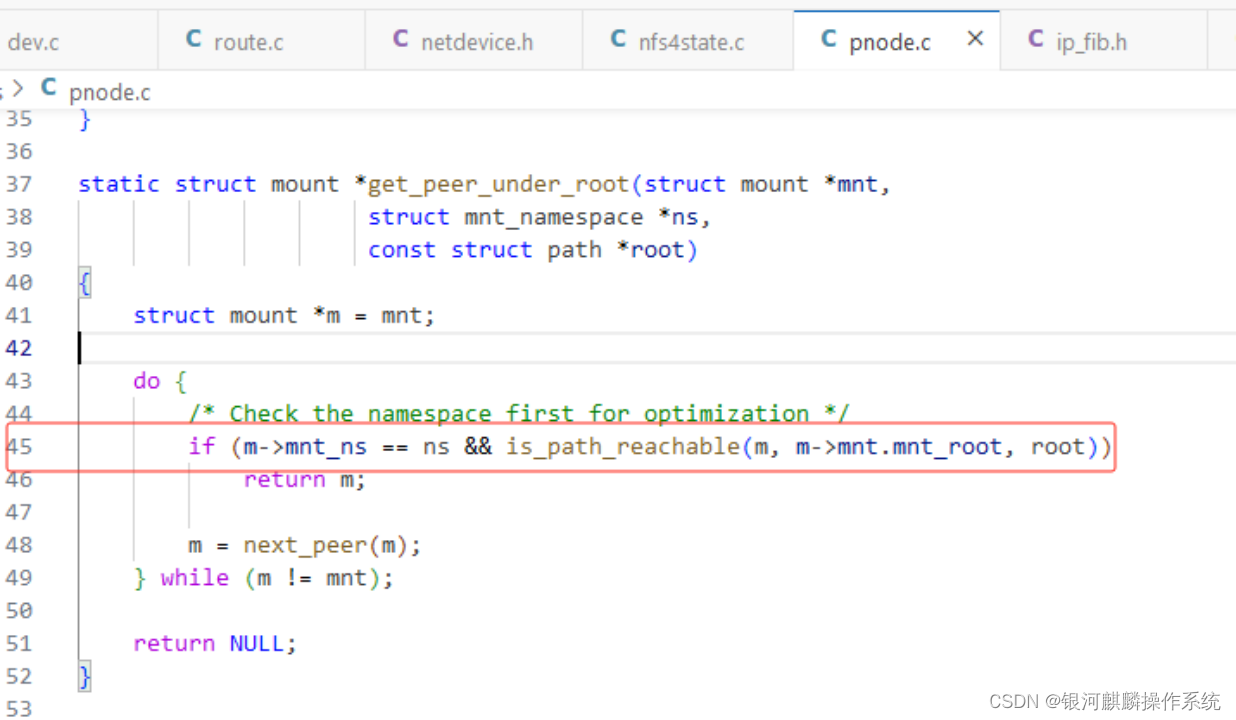

分析软锁堆栈的PC寄存器 get_dominating_id+0x58/0xb8,可见一直在执行/fs/pnode.c: 45

查看/fs/pnode.c: 45的源码如下,可见当时卡在do{}while循环出不来,这是因为m = next_peer(m);一直找不到尽头,最后内核一直在循环遍历。

vmcore中查看mount信息

发现有很多

/dev/mapper/klas-root 挂载到/mnt/paas/kubernetes/kubelet/plugins/kubernetes.io/csi/pv。这是不正常的。

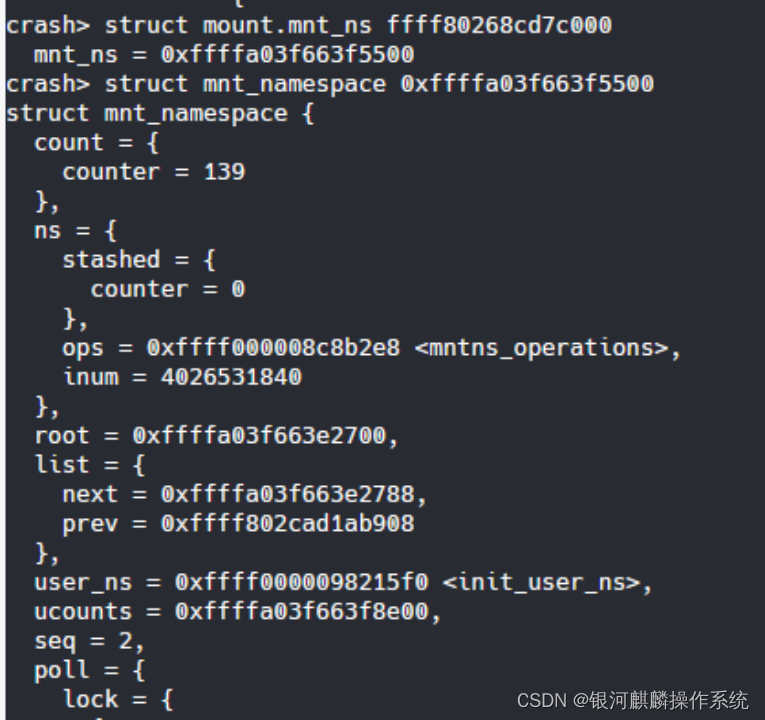

查看各个struct mount.mnt_ns,发现都是相同的,说明struct mnt_namespace相同。

使用如下脚本复现了重复挂载

4.问题分析结果

分析两台机器的vmcore-dmesg.txt日志。软锁可能发生在runc进程尝试从某个文件(可能是与文件系统挂载相关的文件)中读取数据时。由于某种原因,这个读取操作卡住了。

分析vmcore,查看软锁时的堆栈情况,可见 pc : get_dominating_id+0x58/0xb8, lr : show_mountinfo+0x10c/0x2d8,从lr寄存器看,是在查看挂载信息出现了软锁。

查看pc寄存器所对应的内核代码,可见当时正处于一个查看mount的循环中无法出来。使用crash分析vmcore发现mount过多,有无数条/dev/mapper/klas-root 挂载到/mnt/paas/kubernetes/kubelet/plugins/kubernetes.io/csi/pv的记录,导致遍历这些记录的时候,内核进入一个死循环,最后触发软锁。

需应用端检查是否存在类似于循环挂载/dev/mapper/klas-root 挂载到/mnt/paas/kubernetes/kubelet/plugins/kubernetes.io/csi/pv的情况。例如循环执行 mount --bind / /mnt/paas/kubernetes/kubelet/plugins/kubernetes.io/csi/pv。

Ongwu博客 版权声明:以上内容未经允许不得转载!授权事宜或对内容有异议或投诉,请联系站长,将尽快回复您,谢谢合作!